BWH Computational Pathology Special Seminar

Title: Using deep learning models to debug regulatory genomics experiments and decode cis-regulatory syntax

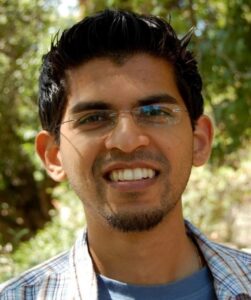

Speaker: Anshul Kundaje, PhD

Affiliation: Stanford University

Position: Associate Professor, Genetics and Computer Science

Date: Monday, April 22, 2024

Time: 4:00 PM - 5:00 PM ET

Zoom: https://partners.zoom.us/j/82163676866

Meeting ID: 821 6367 6866

Anshul Kundaje, PhD, is Associate Professor of Genetics and Computer Science at Stanford University. His primary research area is large-scale computational regulatory genomics. The Kundaje lab develops deep learning models of gene regulation and model interpretation methods to decipher non-coding DNA and genetic variation associated with disease. Dr. Kundaje has led computational efforts to develop widely used resources in collaboration with several NIH consortia including ENCODE, Roadmap Epigenomics and IGVF. Dr. Kundaje is a recipient of the 2016 NIH Director’s New Innovator Award and the 2014 Alfred Sloan Fellowship.

Links: The Encyclopedia of DNA Elements (ENCODE) Project, Stanford University, MIT